What is LLM Token Counter?

LLM Token Counter is a tool that helps users manage token limits for widely used large language models (LLMs), including GPT-3.5, GPT-4, Claude-3, Llama-3, and many others.

Feature

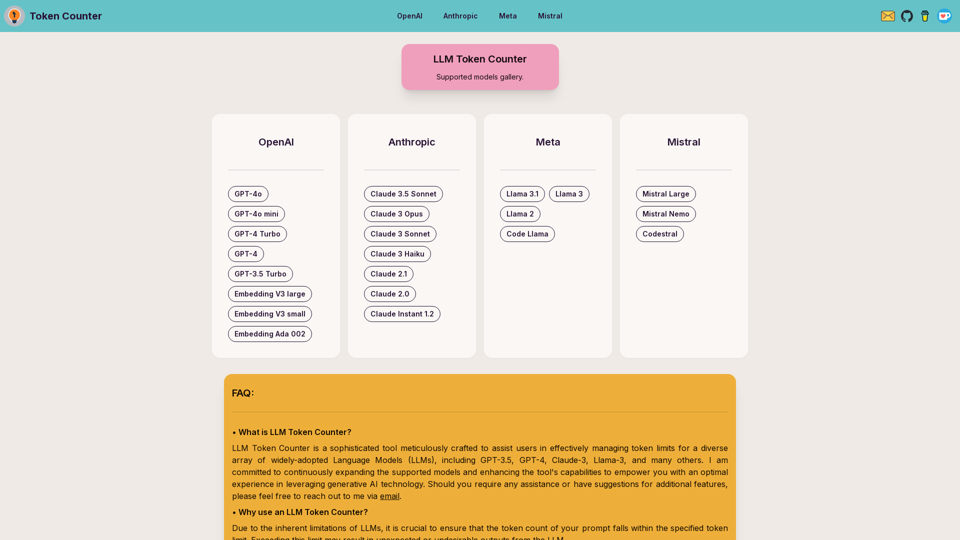

LLM Token Counter supports a wide range of models, including OpenAI's GPT-4o, GPT-4o mini, GPT-4 Turbo, GPT-4, GPT-3.5 Turbo, Embedding V3 large, Embedding V3 small, and Embedding Ada 002; Anthropic's Claude 3.5 Sonnet, Claude 3 Opus, Claude 3 Sonnet, Claude 3 Haiku, Claude 2.1, Claude 2.0, and Claude Instant 1.2; Meta's Llama 3.1, Llama 3, Llama 2, and Code Llama; and Mistral's Mistral Large, Mistral Nemo, and Codestral.

How to use LLM Token Counter

LLM Token Counter uses Transformers.js, a JavaScript implementation of the Hugging Face Transformers library. Tokenizers are loaded directly in your browser, so the token count is calculated client-side. Thanks to the efficient Rust implementation of the Transformers library, the calculation is fast.
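As a rough illustration of the client-side approach described above, the sketch below counts tokens with a Transformers.js tokenizer. It is not the tool's actual source. The package name `@huggingface/transformers`, the tokenizer repository id `Xenova/gpt-4`, and the helper names `countTokens`, `loadTokenizer`, and `countTokensFor` are assumptions made for the example. It also assumes an environment that can resolve the package, such as Node or a bundled browser app.

```javascript
// Minimal sketch, not the tool's real code.
// Assumed (not from the source): the package "@huggingface/transformers"
// and the tokenizer repo id passed by the caller, e.g. "Xenova/gpt-4".

// Pure helper: accepts any object whose encode(text) returns an array of token ids.
function countTokens(tokenizer, text) {
  return tokenizer.encode(text).length;
}

// Loads a tokenizer on demand. The library is imported lazily so the
// helper above can be used without it being installed.
async function loadTokenizer(modelId) {
  const { AutoTokenizer } = await import("@huggingface/transformers");
  return AutoTokenizer.from_pretrained(modelId);
}

// Convenience wrapper: load the tokenizer, then count locally.
// Note: encode() may add special tokens by default, so the count can
// include them depending on the tokenizer.
async function countTokensFor(modelId, text) {
  const tokenizer = await loadTokenizer(modelId);
  return countTokens(tokenizer, text);
}
```

A call such as `countTokensFor("Xenova/gpt-4", "Hello, world!")` would return a promise that resolves to the token count. The tokenizer files are downloaded once, and the text is encoded locally.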

Price

LLM Token Counter is free to use, with no subscription or payment required.

Helpful Tips

To get the most out of LLM Token Counter, keep your prompt concise and within the target model's token limit. Staying within the limit helps you avoid the unexpected or undesirable outputs that can occur when a prompt exceeds it.
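The tip above amounts to a simple budget check once you have a token count. The sketch below shows one hypothetical way to write it. The function name `checkTokenBudget` and the `reservedForOutput` parameter are illustrative assumptions, and the limits must come from the model's own documentation.

```javascript
// Minimal sketch of a token budget check (illustrative, not part of the tool).
// promptTokens: count reported by a token counter.
// tokenLimit: the model's token limit, supplied by the caller.
// reservedForOutput: hypothetical allowance for the model's reply (default 0).
function checkTokenBudget(promptTokens, tokenLimit, reservedForOutput = 0) {
  for (const value of [promptTokens, tokenLimit, reservedForOutput]) {
    if (!Number.isInteger(value) || value < 0) {
      throw new RangeError("token counts must be non-negative integers");
    }
  }
  const remaining = tokenLimit - reservedForOutput - promptTokens;
  // fits is true when the prompt plus the reserved allowance stays within the limit.
  return { fits: remaining >= 0, remaining };
}
```

For example, `checkTokenBudget(900, 1000, 200)` reports that the prompt does not fit, because only 800 tokens are available for the prompt once 200 are set aside.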

Frequently Asked Questions

Why use an LLM Token Counter?

Every LLM accepts only a limited number of tokens, so it is important to make sure that the token count of your prompt falls within the model's token limit. Exceeding this limit may result in unexpected or undesirable outputs from the LLM.

Will I leak my prompt?

No. The token count is calculated client-side, in your browser, so your prompt is never transmitted to the server or any external entity.